The objective of multiprogramming is to have some process running at all times, to maximize CPU utilization. The objective of time sharing is to switch the CPU among processes so frequently that users can interact with each program while it is running. To meet these objectives, the process scheduler selects an available process (possibly from a set of several available processes) for program execution on the CPU. For a single processor system, there will never be more than one running process. Iff there are more processes, the rest will have to wait until the CPU is free and can be rescheduled.

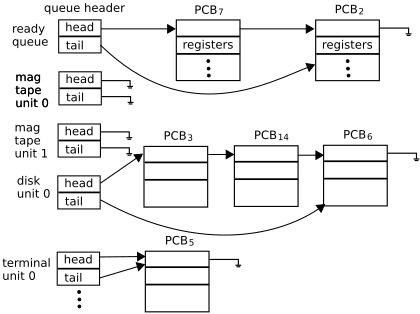

Figure 3.5 The ready queue and various device queues

As processes enter the system, they are put into a job queue, which consists of all processes in the system. The processes that are residing in main memory and are ready and waiting to execute are kept on a list called the ready queue. This queue is generally stored as a linked list. A ready-queue header contains pointers to the first and final PCBs (Process Control Blocks) in the list. Each PCB includes a pointer field that points to the next PCB in the ready queue.

The system also includes other queues. When a process is allocated the CPU, it executes for a while and eventually quits, is interrupted, or waits for the occurrence of a particular event, such as the completion of an I/O request. Suppose the process makes an I/O request to a shared device, such as a disk. Since there are many processes in the system, the disk may be busy with the I/O request of some other process. The process therefore may have to wait for the disk. The list of processes waiting for a particular I/O device is called a device queue. Each device has its own device queue (Figure 3.5).

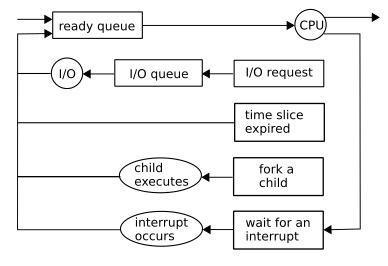

Queueing diagram representation of process scheduling

A common representation of process scheduling is a gueueing diagram, such as that in figure 3.6. Each rectangular box represents a queue. Two types of queues are present: the ready queue and, a set of device queues. The circles represent the resources that serve the queues, and the arrows indicate the flow of processes in the system.

A new process is initially put in the ready queue. It waits there until it is selected for execution, or dispatched. Once the process is allocated the CPU and is executing, one of several events could occur:

• The process could issue an I/O request and then be placed in an I/O queue.

• The process could create a new child process and wait for the child's termination.

• The process could be removed forcibly from the CPU, as a result of an interrupt, and be put back in the ready queue.

In the first two cases, the process eventually switches from the waiting state to the ready state and is then put back in the ready queue. A process continues this cycle until it terminates, at which time it is removed from all queues and has its PCB and resources deallocated.

About the Authors

Abraham Silberschatz is the Sidney J. Weinberg Professor of Computer Science at Yale University. Prior to joining Yale, he was the Vice President of the Information Sciences Research Center at Bell Laboratories. Prior to that, he held a chaired professorship in the Department of Computer Sciences at the University of Texas at Austin.

Professor Silberschatz is a Fellow of the Association of Computing Machinery (ACM), a Fellow of Institute of Electrical and Electronic Engineers (IEEE), a Fellow of the American Association for the Advancement of Science (AAAS), and a member of the Connecticut Academy of Science and Engineering.

Greg Gagne is chair of the Computer Science department at Westminster College in Salt Lake City where he has been teaching since 1990. In addition to teaching operating systems, he also teaches computer networks, parallel programming, and software engineering.

Operating System Concepts, now in its ninth edition, continues to provide a solid theoretical foundation for understanding operating systems. The ninth edition has been thoroughly updated to include contemporary examples of how operating systems function. The text includes content to bridge the gap between concepts and actual implementations. End-of-chapter problems, exercises, review questions, and programming exercises help to further reinforce important concepts. A new Virtual Machine provides interactive exercises to help engage students with the material.

Reader Adam Sinclair says, "I'm writing this review from the perspective of a student. I am finishing an Operating Systems course at university and I have to say this book is fantastic at introducing new concepts. If there is ever a conversation about OS, I always refer to this book. The content is very well laid out and organized in a way that can be read from beginning to end. There is no need to jump from one chapter to another (unless you want to skip sections)."

Reader Chetan Sharma says, "This book is bible for operating system knowledge. It covers very important concepts of Process Management and Memory Management. This book is good for all type of readers - Beginner, Intermediate and Advanced reader. Highly recommended for Students/Professionals/Readers who want to enhance their knowledge.

More Computer Architecture Articles:

• Computer Buses

• CPU Cache Basics

• Using the Microcontroller Timers

• Digital to Analog Convertion with a Microcontroller

• Intel Celeron D Processor

• AMD Sempron Processor

• Simplified Windows Architecture Overview

• Digital Logic Levels and Transfer Characteristics

• Capacitors in AC Circuits

• CPU Process Memory Address Binding