With both priority and round-robin scheduling, all processes may be placed in a single queue, and the scheduler then selects the process with the highest priority to run. Depending on how the queues are managed, an O(n) search may be necessary to determine the highest-priority process. In practice, it is often easier to have separate queues for each distinct priority, and priority scheduling simply schedules the process in the highest-priority queue. This is illustrated in Figure 5.7. This approach - known as multilevel queue - also works well when priority scheduling is combined with round-robin: if there are multiple processes in the highest-priority queue, they are executed in round-robin order. In the most generalized form of this approach, a priority is assigned statically to each process, and a process remains in the same queue for the duration of its runtime.

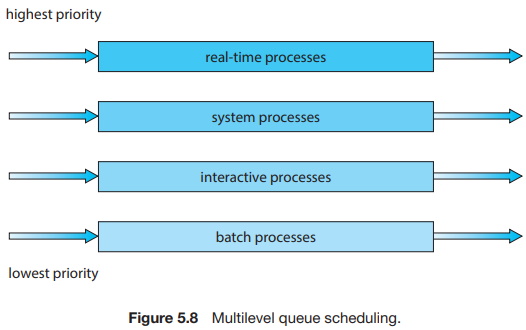

A multilevel queue scheduling algorithm can also be used to partition processes into several separate queues based on the process type (Figure 5.8). For example, a common division is made between foreground (interactive) processes and background (batch) processes. These two types of processes have different response-time requirements and so may have different scheduling needs. In addition, foreground processes may have priority (externally defined) over background processes. Separate queues might be used for foreground and background processes, and each queue might have its own scheduling algorithm. The foreground queue might be scheduled by an RR (Round Robin) algorithm, for example, while the background queue is scheduled by an FCFS (First Come First Served) algorithm.

In addition, there must be scheduling among the queues, which is commonly implemented as fixed-priority preemptive scheduling. For example, the real-time queue may have absolute priority over the interactive queue.

Let's look at an example of a multilevel queue scheduling algorithm with four queues, listed below in order of priority:

1. Real-time processes

2. System processes

3. Interactive processes

4. Batch processes

Each queue has absolute priority over lower-priority queues. No process in the batch queue, for example, could run unless the queues for real-time processes, system processes, and interactive processes were all empty. If an interactive process entered the ready queue while a batch process was running, the batch process would be preempted.

Another possibility is to time-slice among the queues. Here, each queue gets a certain portion of the CPU time, which it can then schedule among its various processes. For instance, in the foreground-background queue example, the foreground queue can be given 80 percent of the CPU time for RR scheduling among its processes, while the background queue receives 20 percent of the CPU to give to its processes on an FCFS basis.

About the Authors

Abraham Silberschatz is the Sidney J. Weinberg Professor of Computer Science at Yale University. Prior to joining Yale, he was the Vice President of the Information Sciences Research Center at Bell Laboratories. Prior to that, he held a chaired professorship in the Department of Computer Sciences at the University of Texas at Austin.

Professor Silberschatz is a Fellow of the Association of Computing Machinery (ACM), a Fellow of Institute of Electrical and Electronic Engineers (IEEE), a Fellow of the American Association for the Advancement of Science (AAAS), and a member of the Connecticut Academy of Science and Engineering.

Greg Gagne is chair of the Computer Science department at Westminster College in Salt Lake City where he has been teaching since 1990. In addition to teaching operating systems, he also teaches computer networks, parallel programming, and software engineering.

The tenth edition of

Operating System Concepts

has been revised to keep it fresh and up-to-date with contemporary examples of how operating

systems function, as well as enhanced interactive elements to improve learning and the student's

experience with the material. It combines instruction on concepts with real-world applications

so that students can understand the practical usage of the content. End-of-chapter problems,

exercises, review questions, and programming exercises help to further reinforce important

concepts. New interactive self-assessment problems are provided throughout the text to help

students monitor their level of understanding and progress. A Linux virtual machine (including

C and Java source code and development tools) allows students to complete programming exercises

that help them engage further with the material.

A reader in the U.S. says, "This is what computer-related books should be like. It is thorough, in depth, information packed, authoritative, and exhaustive. You cannot get this kind of excellent information from the Internet - or many other computer books these days. It's a shame that quality computer books are declining so rapidly in number. I hope they continue to update and publish this book for many years to come.

More Computer Architecture Articles:

• Operating System Boot

• ARM Cortex-A72 Registers

• Operating System Memory Allocation Methods

• Virtual Memory and Memory Paging

• Processor Interrupts

• Factors in Choosing an Oscilloscope

• Multilevel Queue CPU Scheduling Algorithm

• Direct Memory Access (DMA)

• Basic Arithmetic Logic Unit (ALU) Circuitry

• Change Raspberry Pi Default Configuration